|

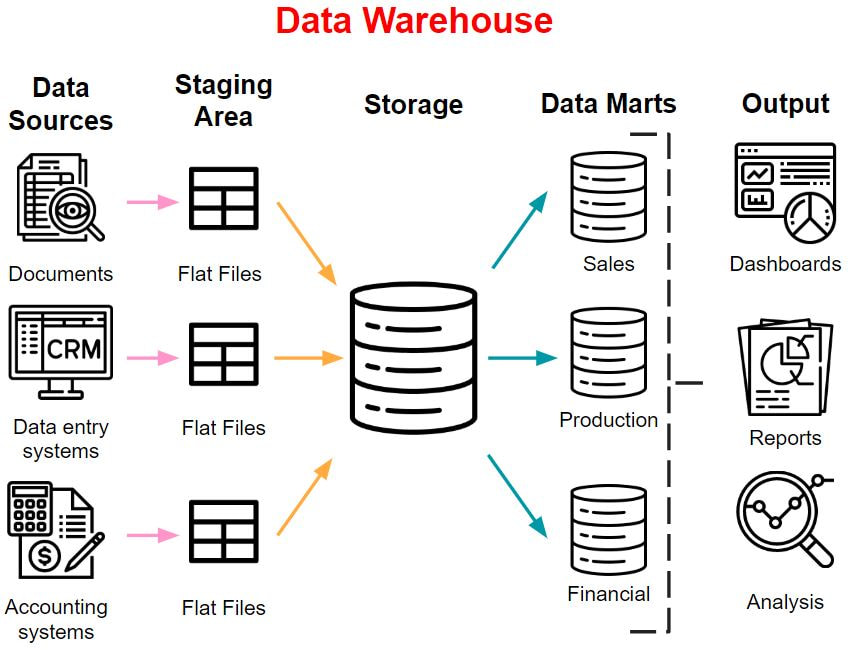

24/2/2020 5 Comments data warehousing

5 Comments

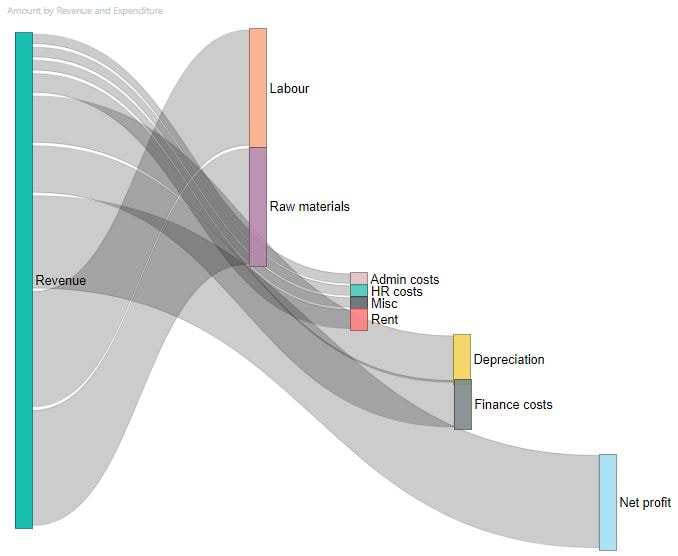

12/2/2020 2 Comments The Sankey Diagram

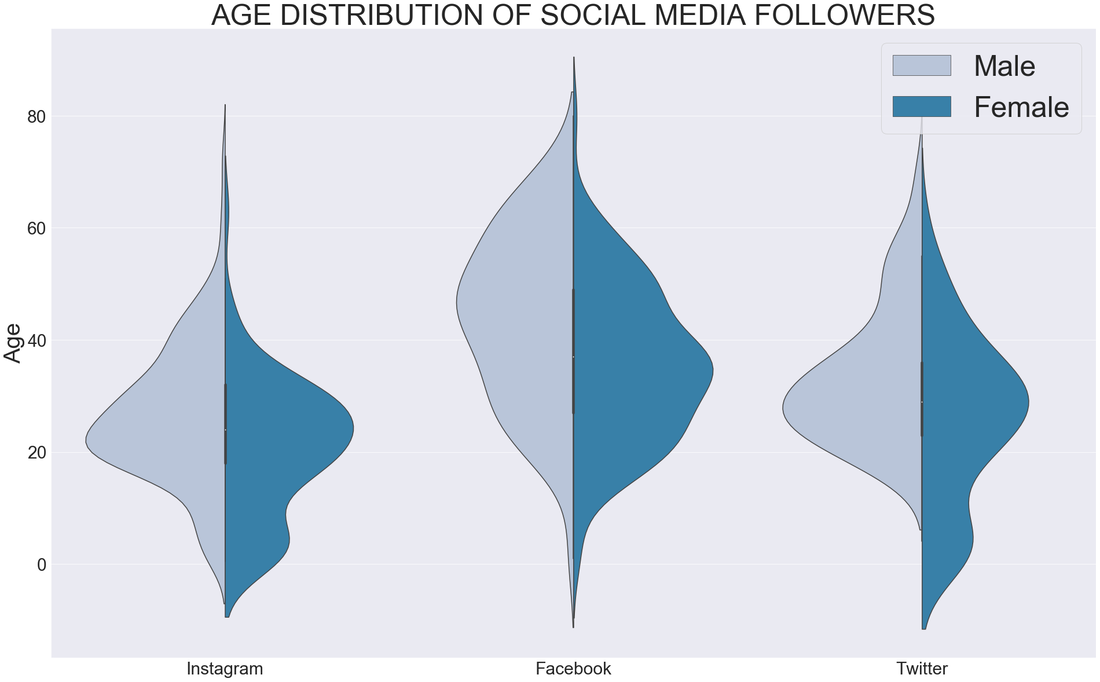

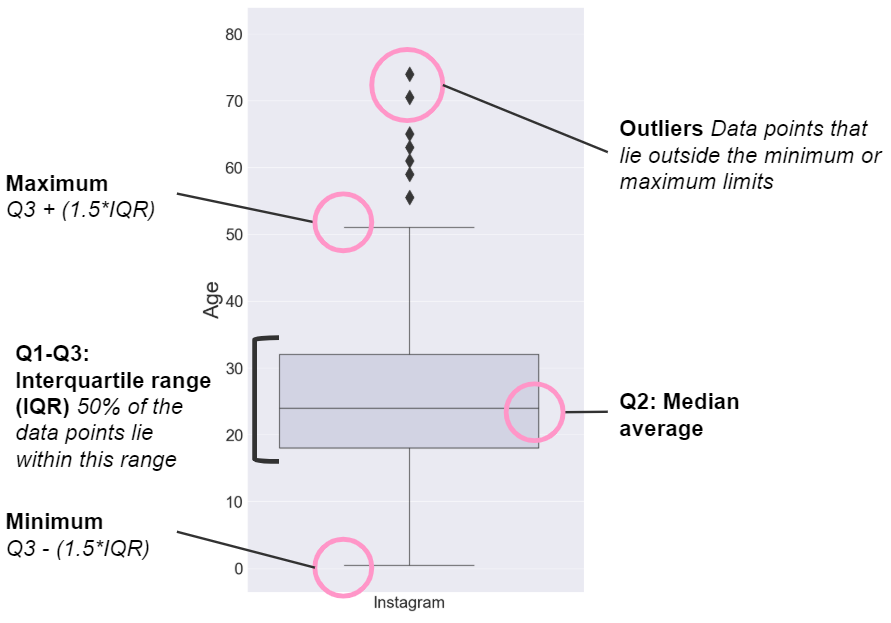

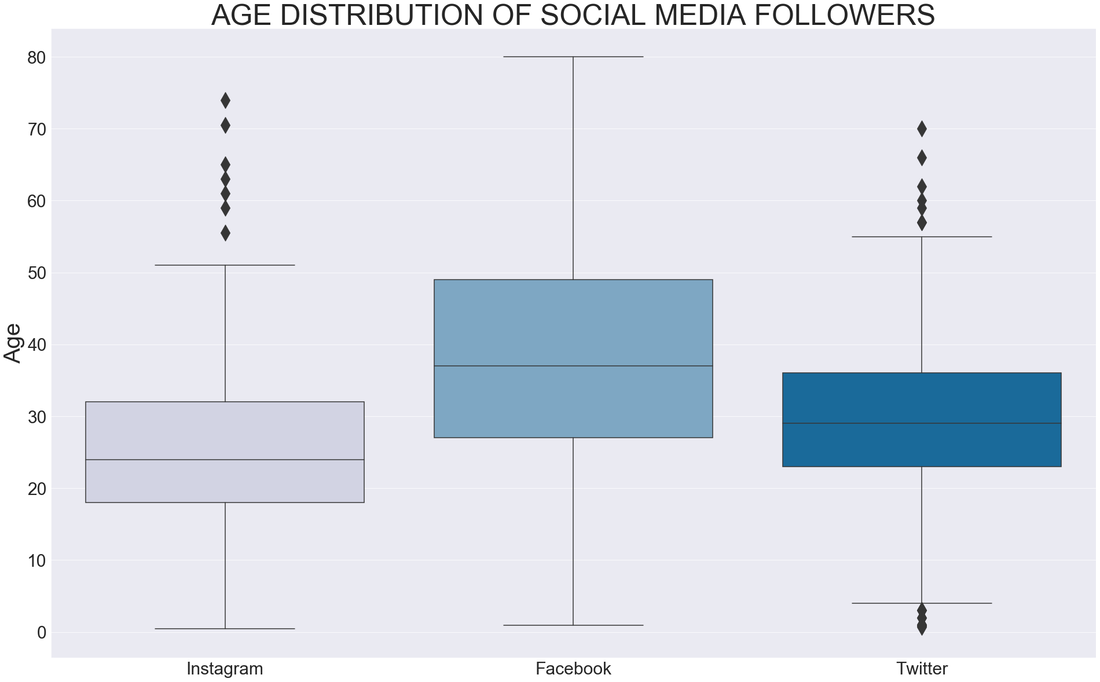

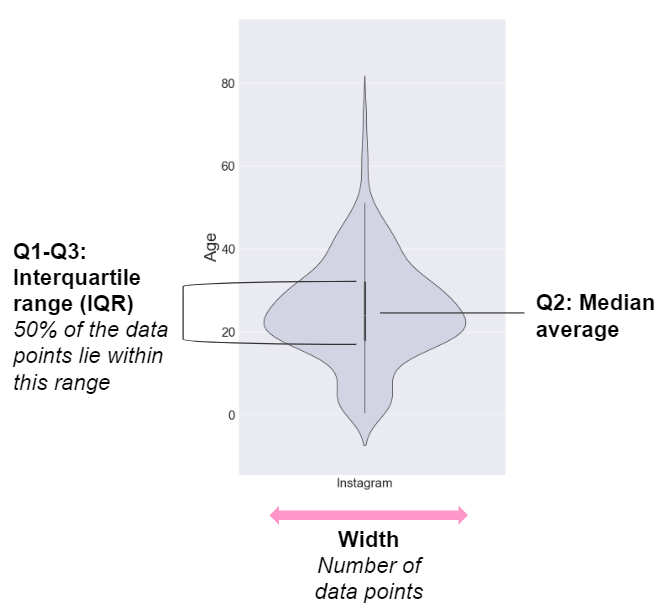

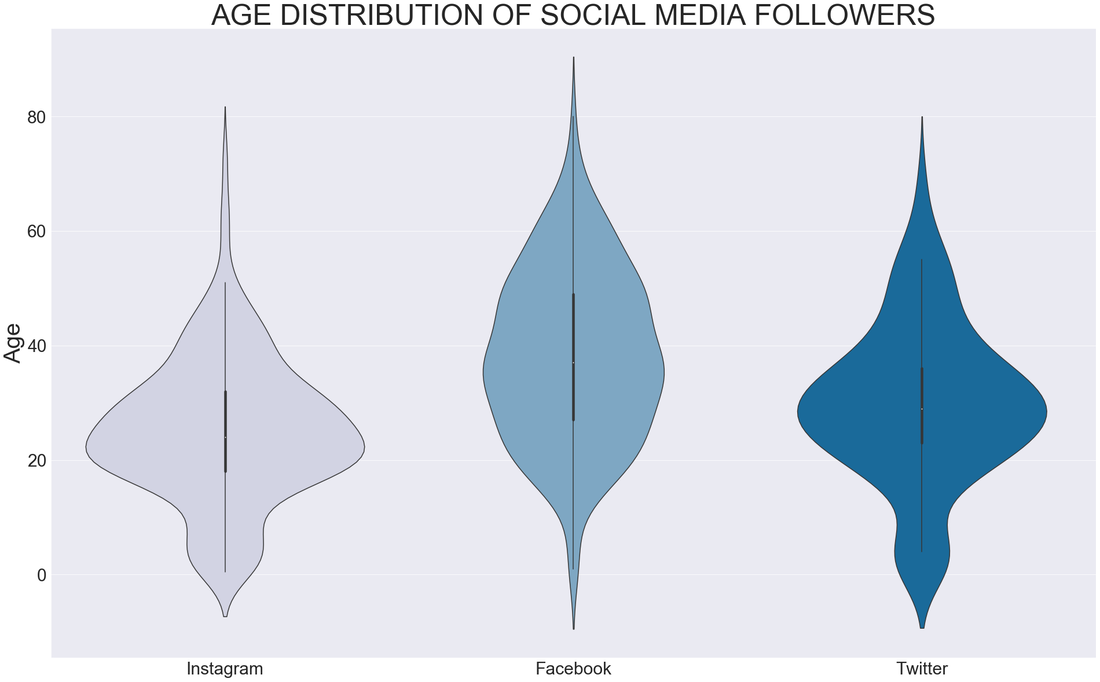

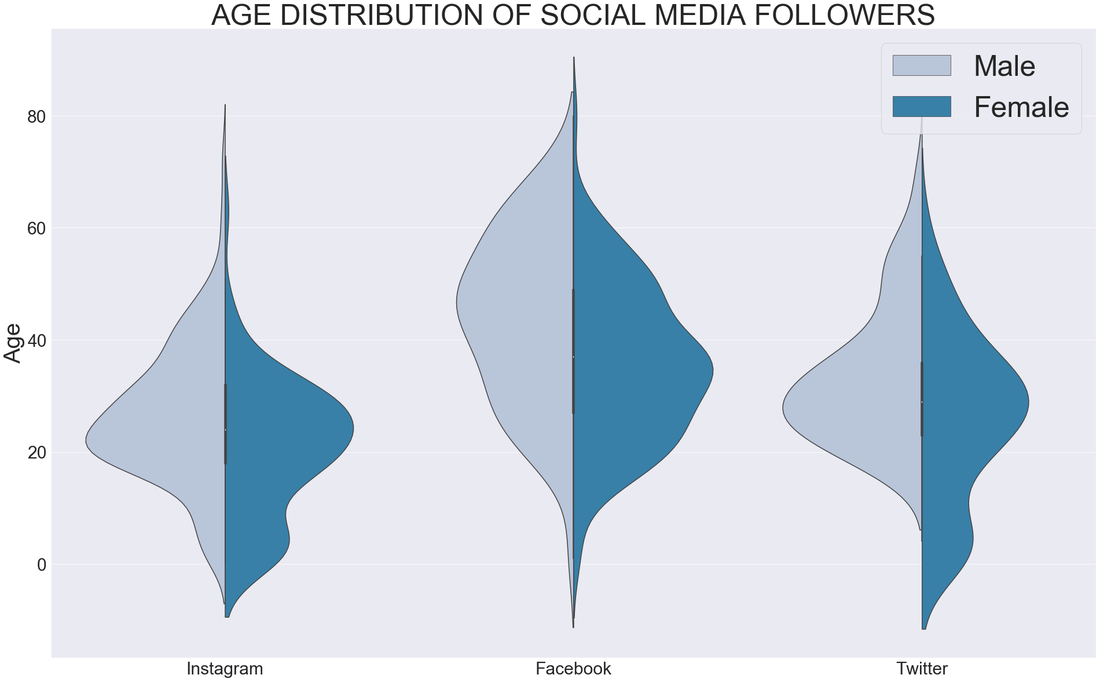

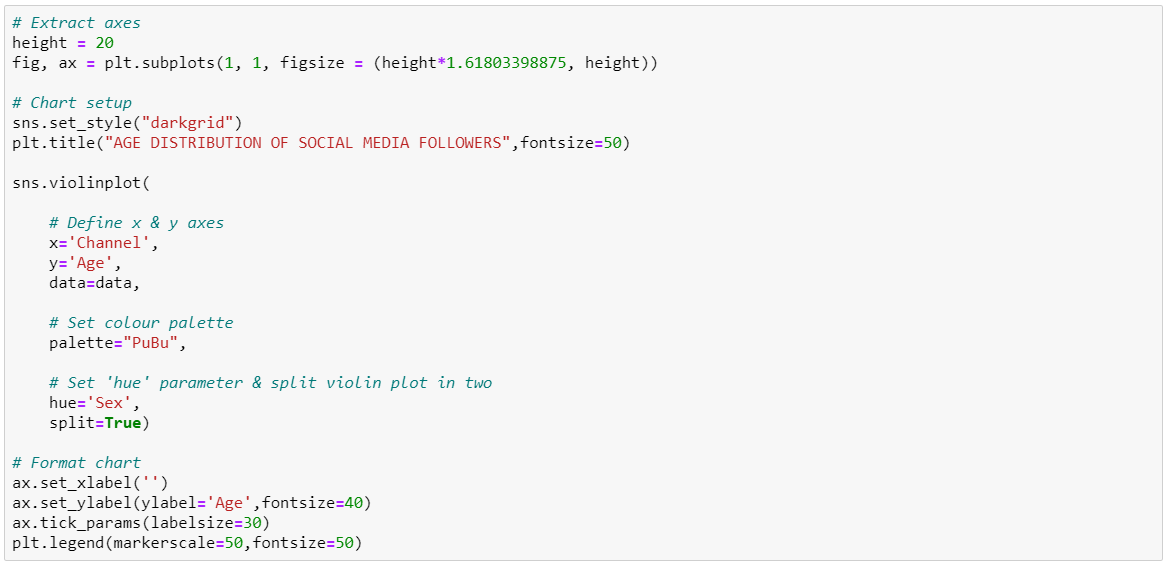

29/10/2019 2 Comments Violin plots

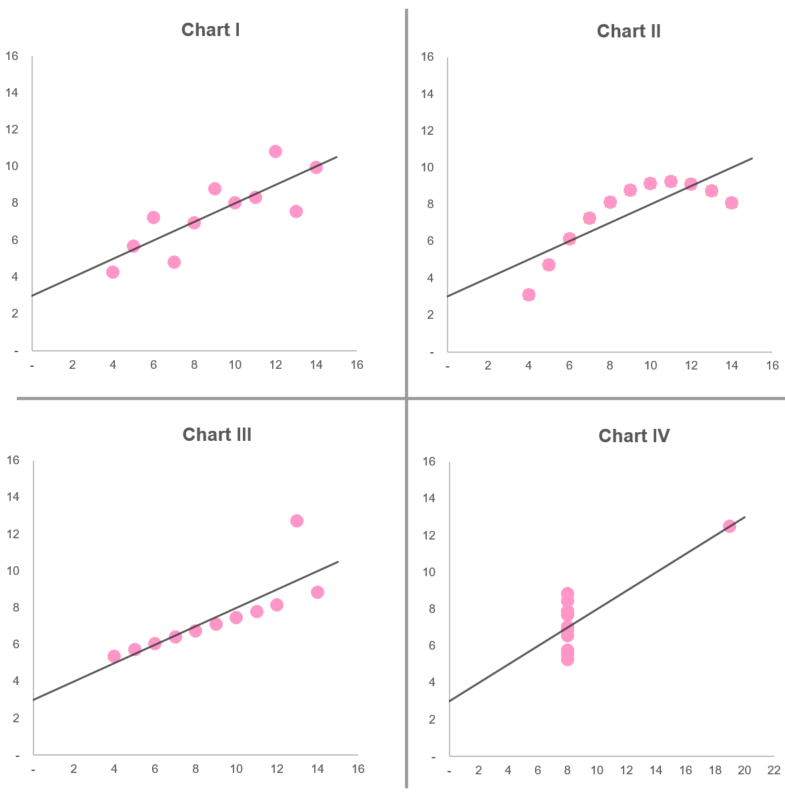

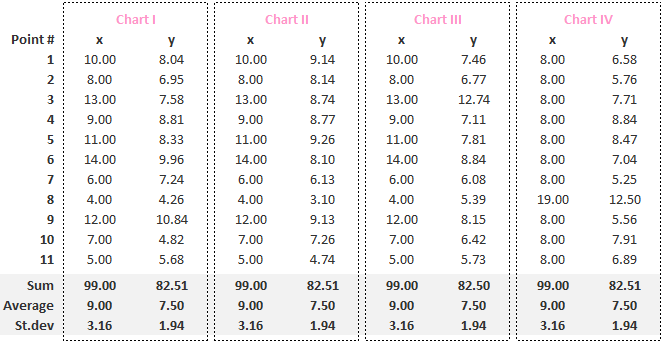

22/8/2019 2 Comments Anscombe's Quartet

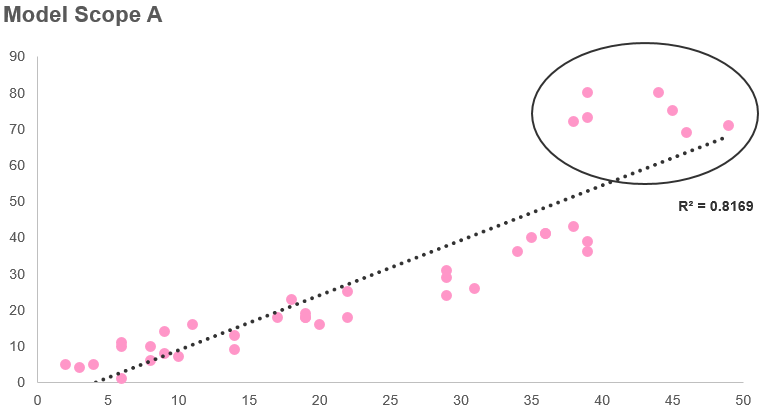

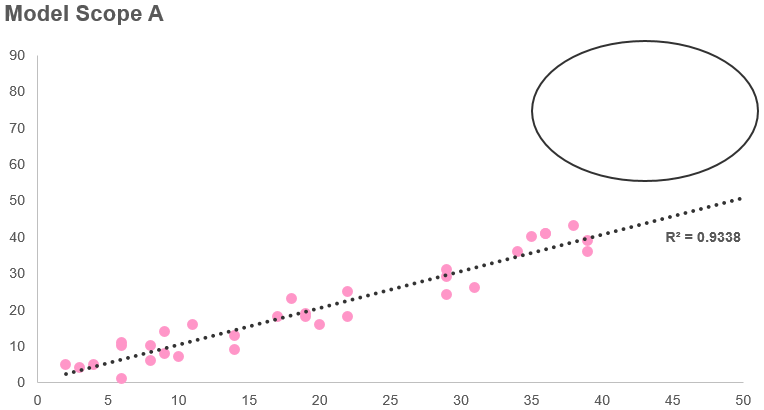

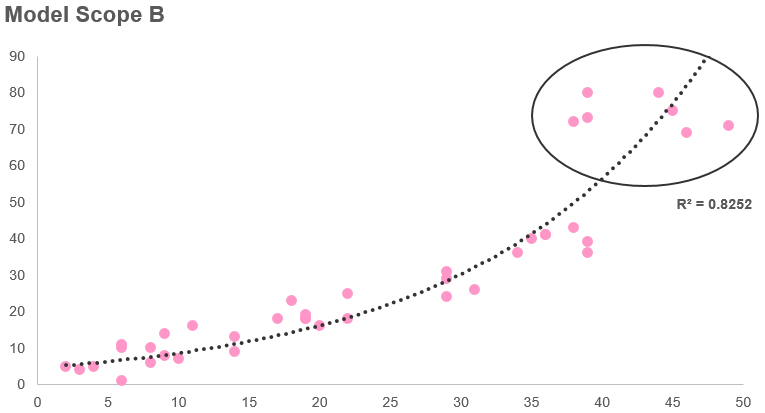

23/6/2019 0 Comments Dealing with outliers

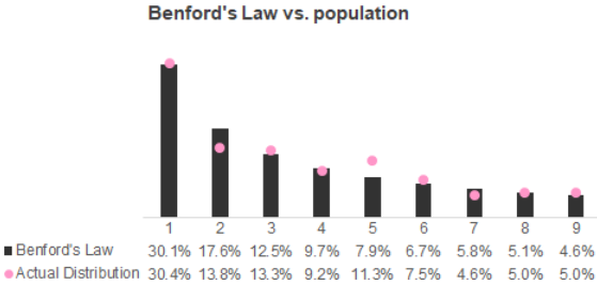

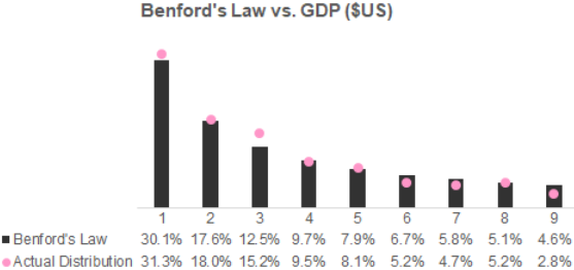

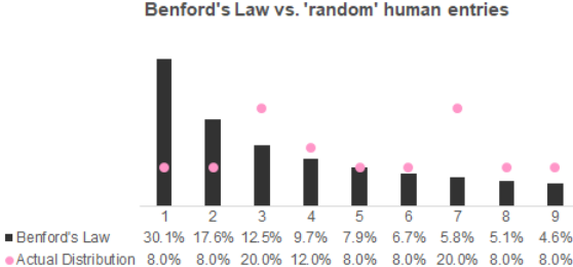

26/4/2019 2 Comments Benford's law |

AuthorLondon SODA Archives

February 2020

Categories |

RSS Feed

RSS Feed